DESCRIPTION

Brian M. Hopkinson, Andrew C. King, Daniel P. Owen, Matthew Johnson-Roberson, Matthew H. Long, Suchendra M. Bhandarkar

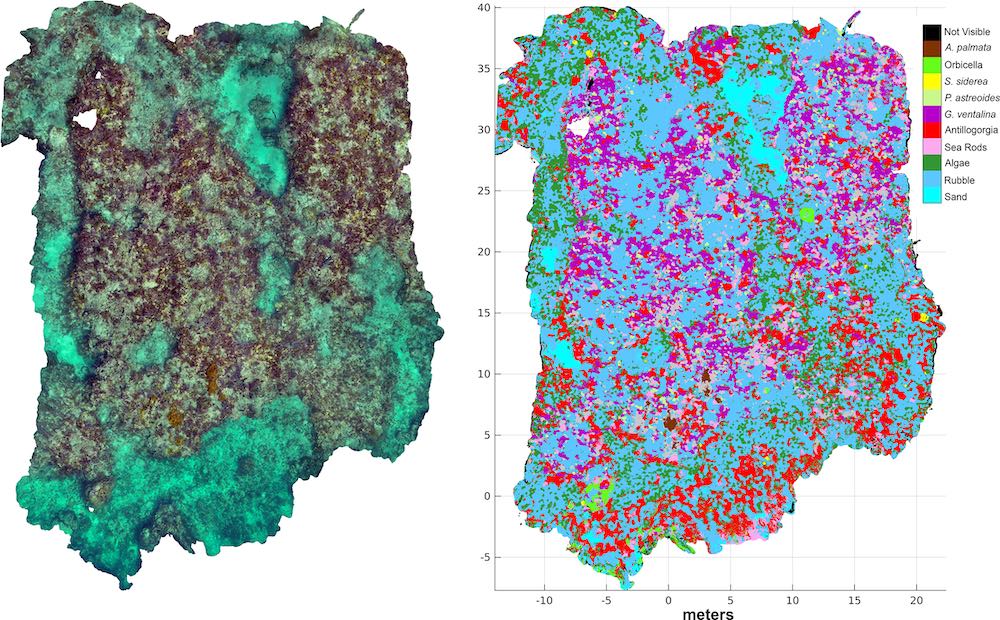

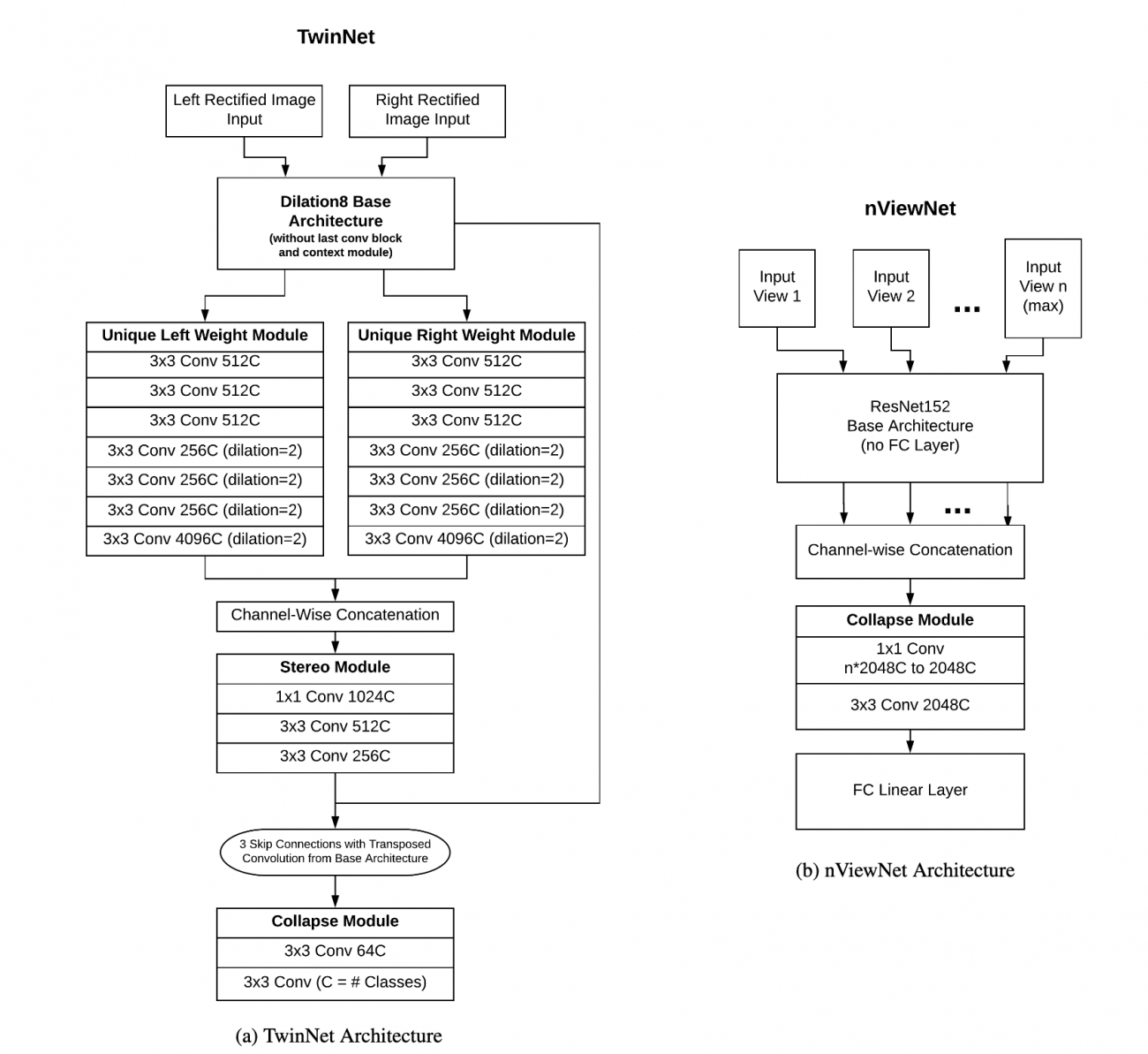

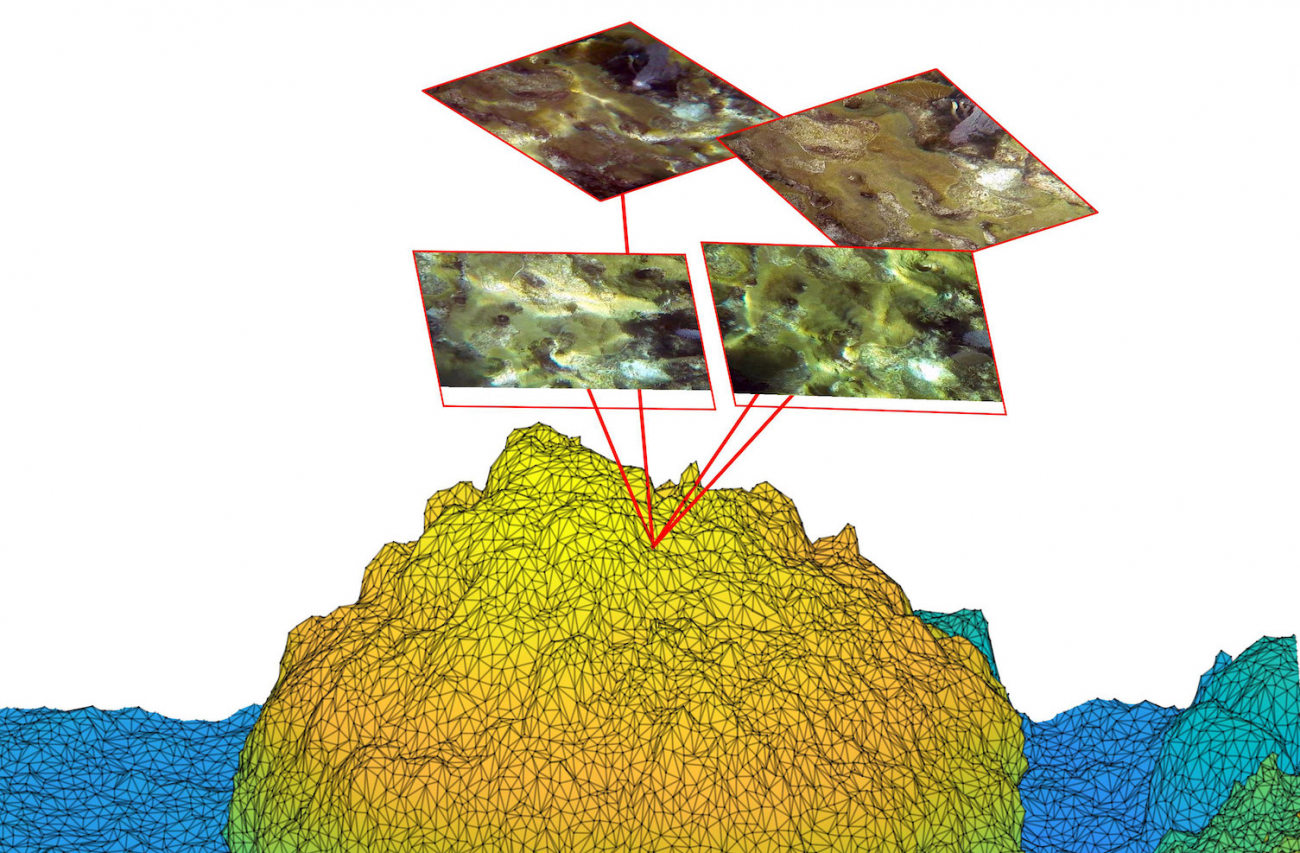

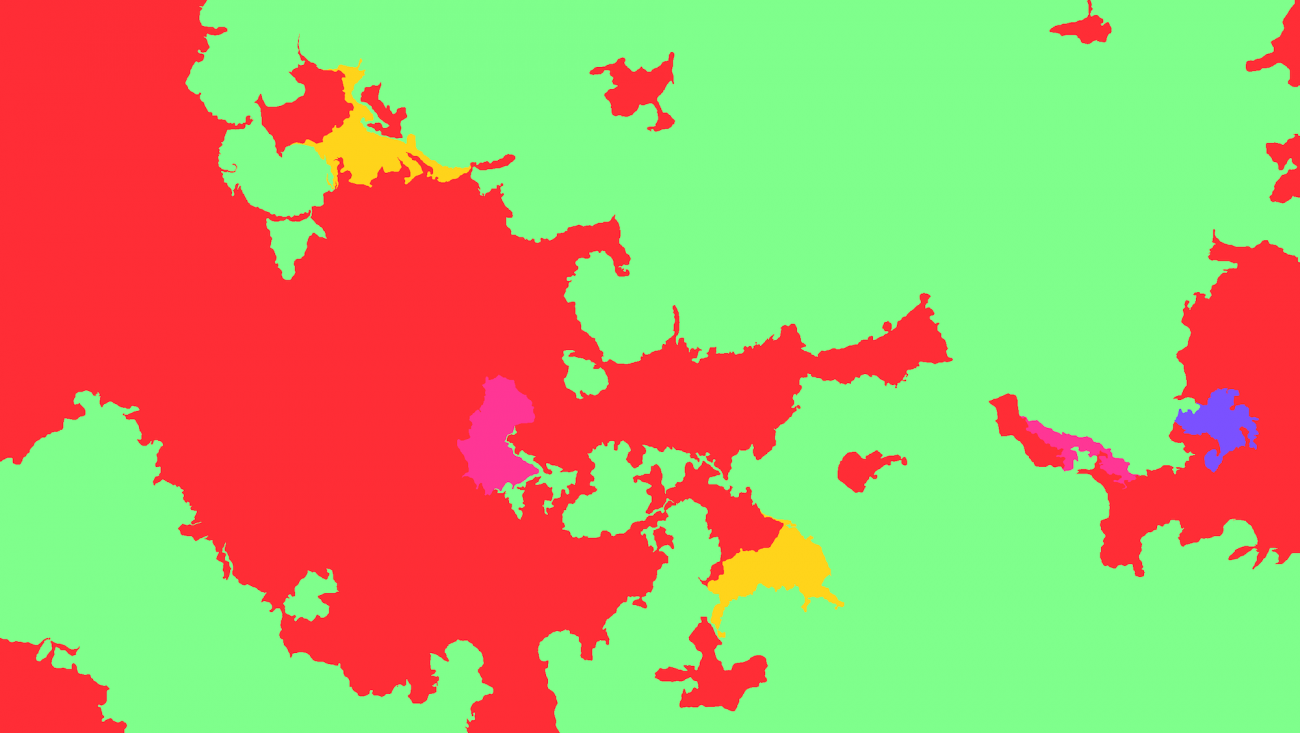

Coral reefs are biologically diverse and structurally complex ecosystems, which have been severally affected by human actions. Consequently, there is a need for rapid ecological assessment of coral reefs, but current approaches require time consuming manual analysis, either during a dive survey or on images collected during a survey. Reef structural complexity is essential for ecological function but is challenging to measure and often relegated to simple metrics such as rugosity. Recent advances in computer vision and machine learning offer the potential to alleviate some of these limitations. We developed an approach to automatically classify 3D reconstructions of reef sections and assessed the accuracy of this approach. 3D reconstructions of reef sections were generated using commercial Structure-from-Motion software with images extracted from video surveys. To generate a 3D classified map, locations on the 3D reconstruction were mapped back into the original images to extract multiple views of the location. Several approaches were tested to merge information from multiple views of a point into a single classification, all of which used convolutional neural networks to classify or extract features from the images, but differ in the strategy employed for merging information. Approaches to merging information entailed voting, probability averaging, and a learned neural-network layer. All approaches performed similarly achieving overall classification accuracies of ~96% and >90% accuracy on most classes. With this high classification accuracy, these approaches are suitable for many ecological applications.

TECHNOLOGY

Open Access Version